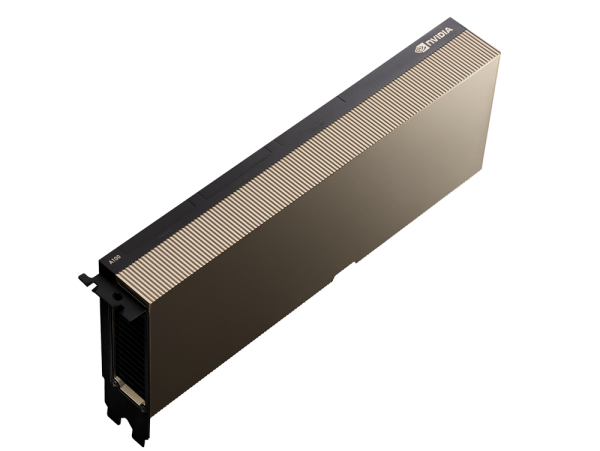

NVIDIA H100 TENSOR CORE GPU

Price (excl. VAT): 0 ₫

| Specification | H100 SXM | H100 PCIe |
|---|---|---|
| FP64 | 30 teraFLOPS | 24 teraFLOPS |
| FP64 Tensor Core | 60 teraFLOPS | 48 teraFLOPS |
| FP32 | 60 teraFLOPS | 48 teraFLOPS |
| TF32 Tensor Core | 1,000 teraFLOPS* / 500 teraFLOPS | 800 teraFLOPS* / 400 teraFLOPS |
| BFLOAT16 Tensor Core | 2,000 teraFLOPS* / 1,000 teraFLOPS | 1,600 teraFLOPS* / 800 teraFLOPS |
| FP16 Tensor Core | 2,000 teraFLOPS* / 1,000 teraFLOPS | 1,600 teraFLOPS* / 800 teraFLOPS |
| FP8 Tensor Core | 4,000 teraFLOPS* / 2,000 teraFLOPS | 3,200 teraFLOPS* / 1,600 teraFLOPS |
| INT8 Tensor Core | 4,000 TOPS* / 2,000 TOPS | 3,200 TOPS* / 1,600 TOPS |
| GPU memory | 80GB | 80GB |
| GPU memory bandwidth | 3TB/s | 2TB/s |
| Decoders | 7 NVDEC, 7 JPEG | 7 NVDEC, 7 JPEG |
| Max thermal design power (TDP) | 700W | 350W |
| Multi-Instance GPUs | Up to 7 MIGs @ 10GB each | Up to 7 MIGs @ 10GB each |
| Form factor | SXM | PCIe |
| Interconnect | NVLink: 900GB/s; PCIe Gen5: 128GB/s | NVLink: 600GB/s; PCIe Gen5: 128GB/s |
| Server options | NVIDIA HGX™ H100 Partner and NVIDIA-Certified Systems™ with 4 or 8 GPUs; NVIDIA DGX™ H100 with 8 GPUs | Partner and NVIDIA-Certified Systems with 1–8 GPUs |

\* With sparsity; the second figure in each cell is without sparsity.
